In a group of friends, everyone usually plays a certain role. Whether they are the player in the group, the comedian, the lover boy, or the quiet introvert who surprisingly gets really “turnt” on the weekend, they all express themselves to one another through sayings, memories, and shared references that resonate with each of them.

And with the rise of terms coined on social media platforms such as TikTok and Twitter, the fun has just begun. Terms like “Bussin” and “Sheesh,” along with poses and facial expressions, all resonate within these groups, making time together enjoyable and creating great memories.

Now, this may not be the case for every friend group out there, as some may find it cringe, but they all still share experiences that make them the friends they are today.

It all boils down to understanding language, tone, and identity. We are usually able to share these experiences because we can reflect upon them. At the very least, we have something in common.

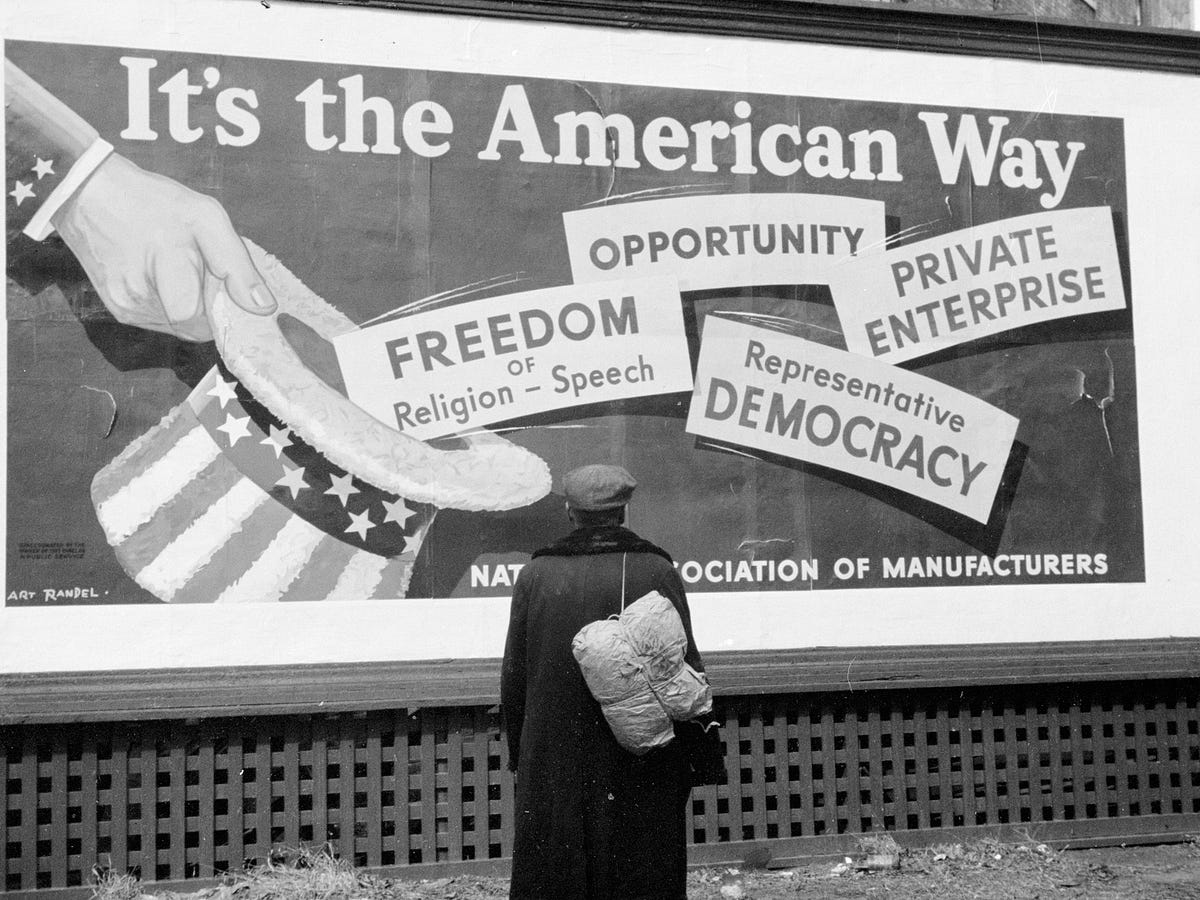

The same can be said about literacy in a social and cultural setting. Our mannerisms may be a result of something we read or watched. Have you ever watched a show, finished it, and found yourself subconsciously acting like someone in the show?

David E. Kirkland of NYU, writer of “‘We Real Cool’: Toward a Theory of Black Masculine Literacies,” had this to say about literacy:

“Words like “dog,” for example, were frequently used among the cool kids as terms of endearment. Such terms were also used as affirmations of coolness, reserved for those young black men who, according to the cool kids, were “down,” a word they used to signify allegiance.”

These terms make us feel comfortable and help us understand one another better. As terms of endearment, they reflect our upbringing, unity, and friendship.

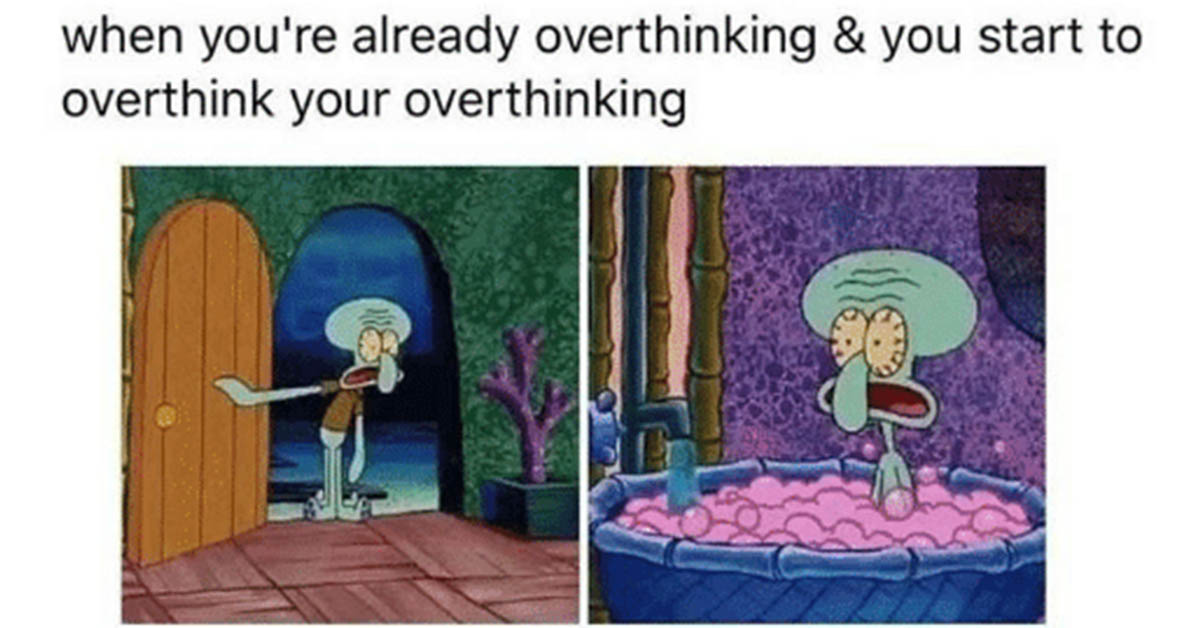

