Twenty million active Twitter accounts are fake. That means 20 million opinions, retweets, and participants in political movements are fake. That figure comes from only one article; it seems likely that there are many more. According to the scholars Laquintano and Vee, between one-quarter and one-third of users in support of Donald Trump or Hillary Clinton were bots as of 2016.

Example of an Obvious Bot Tweet

So, the simple conclusion is that truth is doomed, right? How can the millions who receive their news and political opinions from the “unbiased and democratic” Twitter expect to make informed voting decisions if they are engaging not in civil discourse but in capitalistic vote manipulation through conversations with Twitter bots?

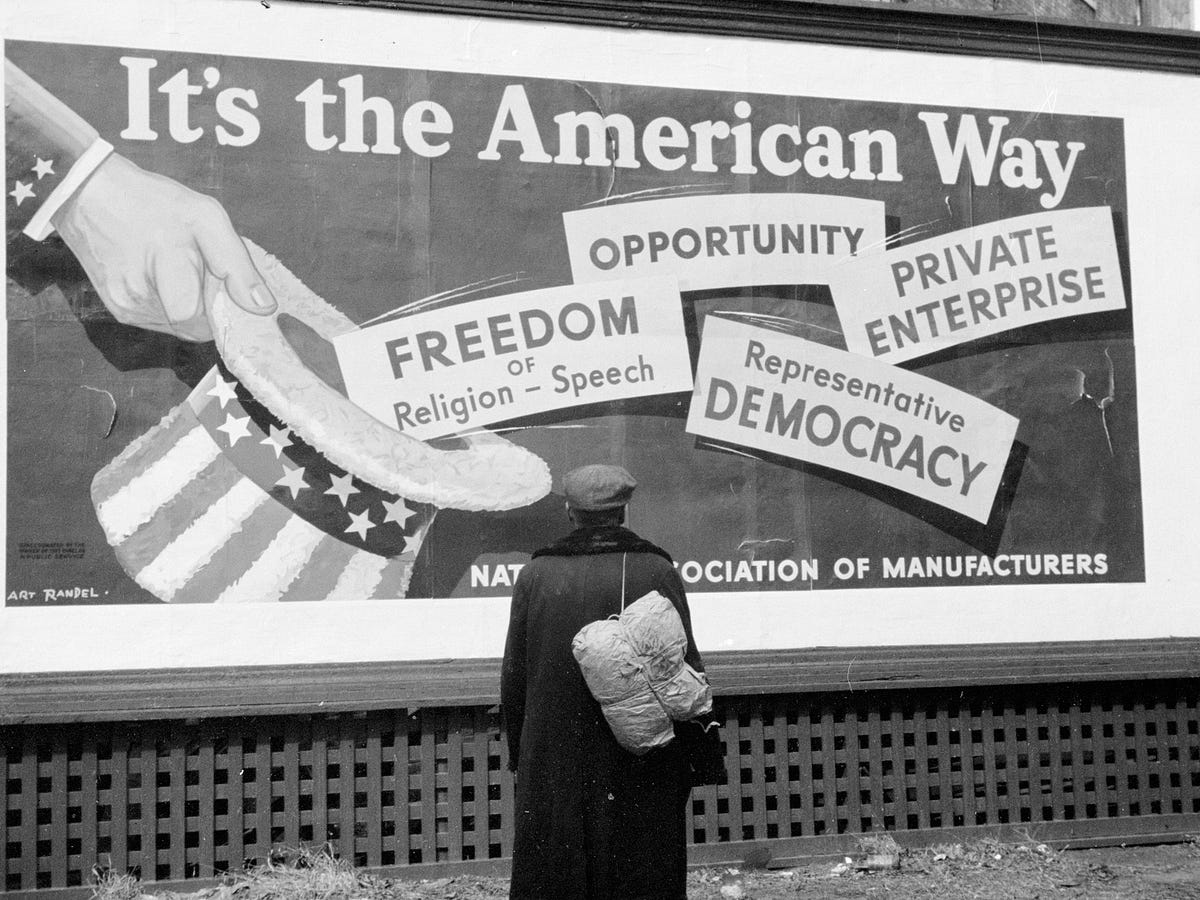

Well, the truth is that the cards have been stacked against voters. Capitalism has always had a heavy hand in politics. From the very beginning, voting itself was restricted to those who had a large economic stake in America: white male landowners. As voting rights expanded, more and more methods of influencing less affluent voters developed. The most obvious are advertisements, from newspaper ads to radio ads, and large donations to politicians’ campaigns.

Political Advertising During the Great Depression

Today, however, this manipulation is more subtle than ever. According to Laquintano and Vee, many of the bots used to sway political opinions succeed either by passing a Turing test or through their sheer numbers. In other words, bots can pass as real humans in the eyes of unsuspecting users. And even when a bot is discovered, there are often so many others that its discovery is inconsequential to the movement.

So, manipulation has always existed in American democracy. The only difference is that now it is not obvious where it is coming from. For example, a further subtle form of manipulation in politics may be vote counting itself, as some sources allege Russian tampering with the 2020 presidential election vote counts.

Ninety percent of news outlets are owned by just six companies. If anyone remembers the musical Newsies, it would take only collusion among those six firms for major social justice issues or pieces of news to be ignored and go unnoticed by Americans. Not to mention that if these firms ever decided to cover an issue in a certain manner to sway votes, they could sway the views of most Americans.

Joseph Pulitzer: Villain of Newsies Who Colluded With Other Newspapers to Stop the Newsies’ Strike

So, that about covers it. News outlets, social media, and even voting itself may be shot. Undoubtedly, historians who study political literature in the future will have an extremely difficult time deciding which news articles, Tweets, and even social movements were entirely fashioned by capitalistic stakes in politics. So, yes, we are doomed, just as doomed as the political system in America always has been.

All this means for us is that the literature of past political movements was a bit more genuine. Today, any political literature is less about fairness or equality than it is about lining the pockets of whoever is interested, in some manner we have yet to understand. So, how to stay sane? Unplug. I have never heard anyone tell me that reading political news has made them happy. In fact, I can say from experience that only the opposite is true. As long as America can make someone richer than us a buck, our system will work and we will have bread on the table.

Millet, Angelus: A Couple Prays in Thanksgiving for Their Day’s Work and Harvest Through the Angelus Prayer, Evocative of the American Ethos