In a previous entry, you learned how to create a set of flashcards on H5P. Flashcards are an excellent study tool, but some students may simply memorize the cards themselves rather than learn the underlying concepts. A self-grading quiz is a good way to reinforce the material on the flashcards. Below is an example quiz based on this flashcard set:

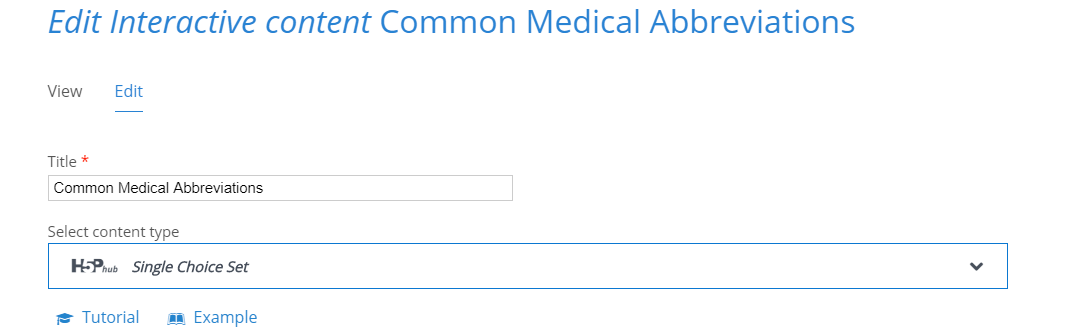

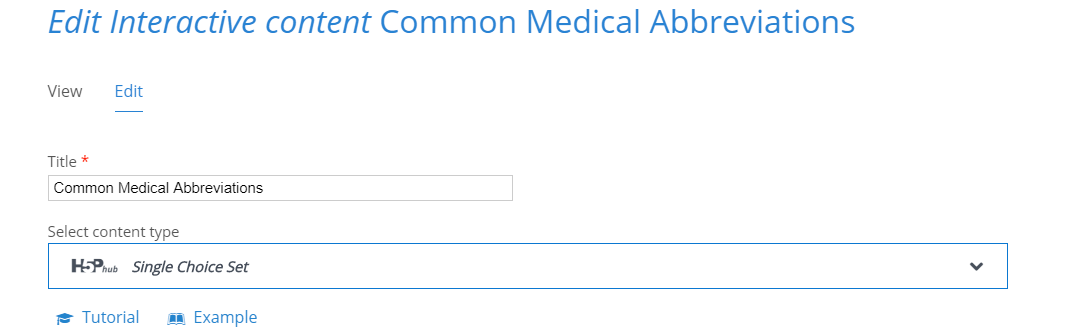

To create an interactive quiz of your own, go to the H5P content creation screen and select “Single Choice Set” from the drop-down menu.

The first dialogue box will set the title for the entire quiz.

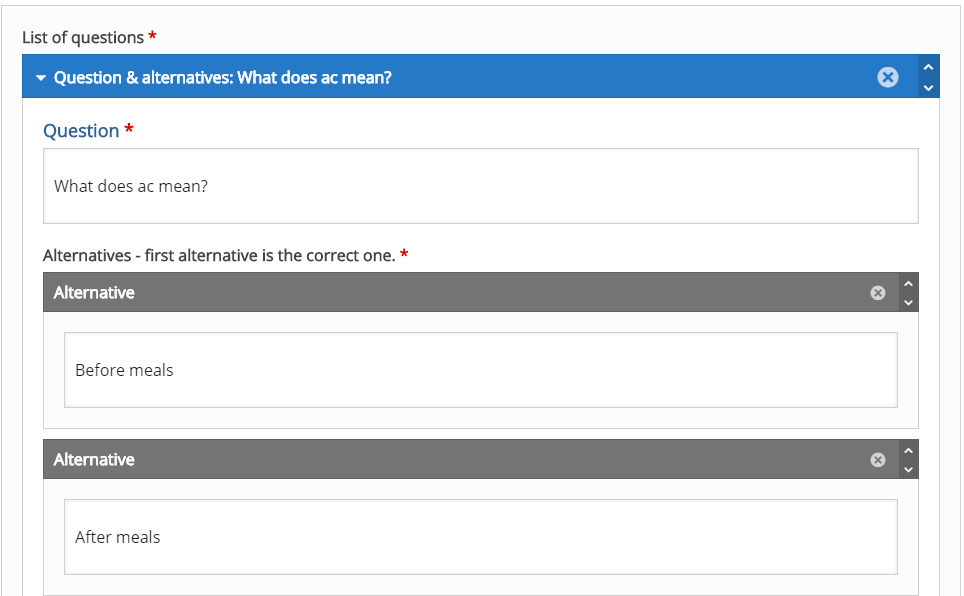

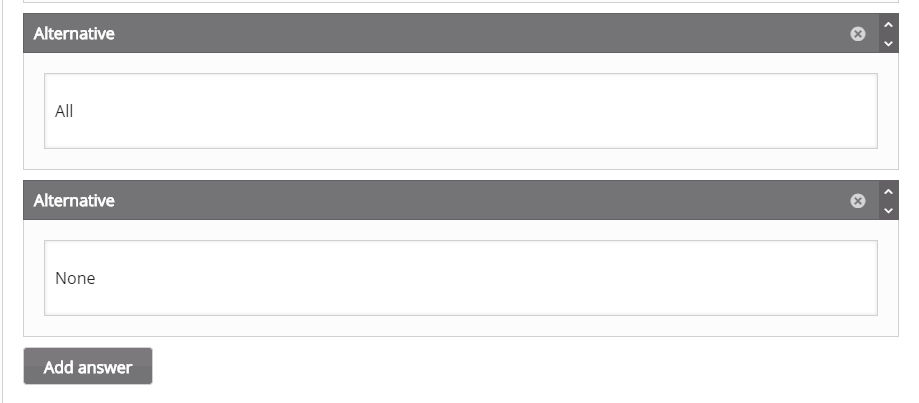

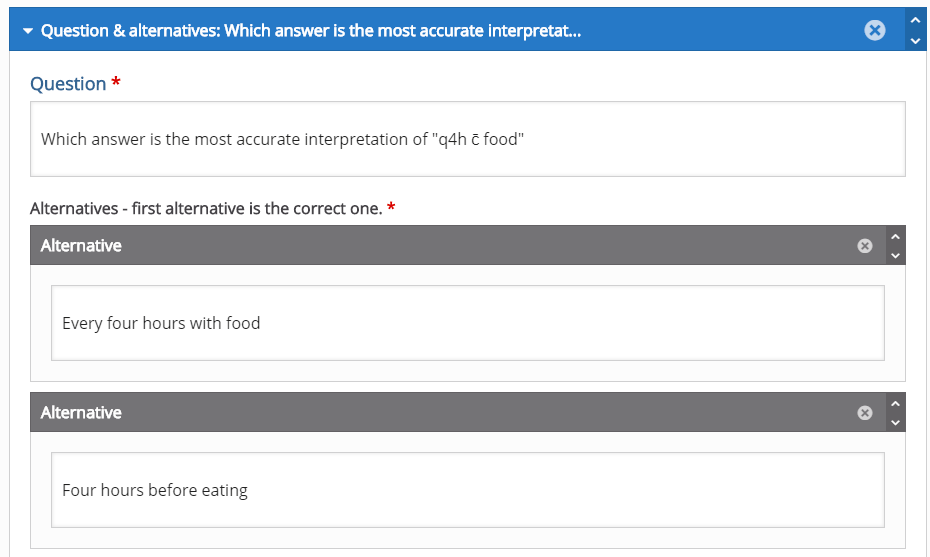

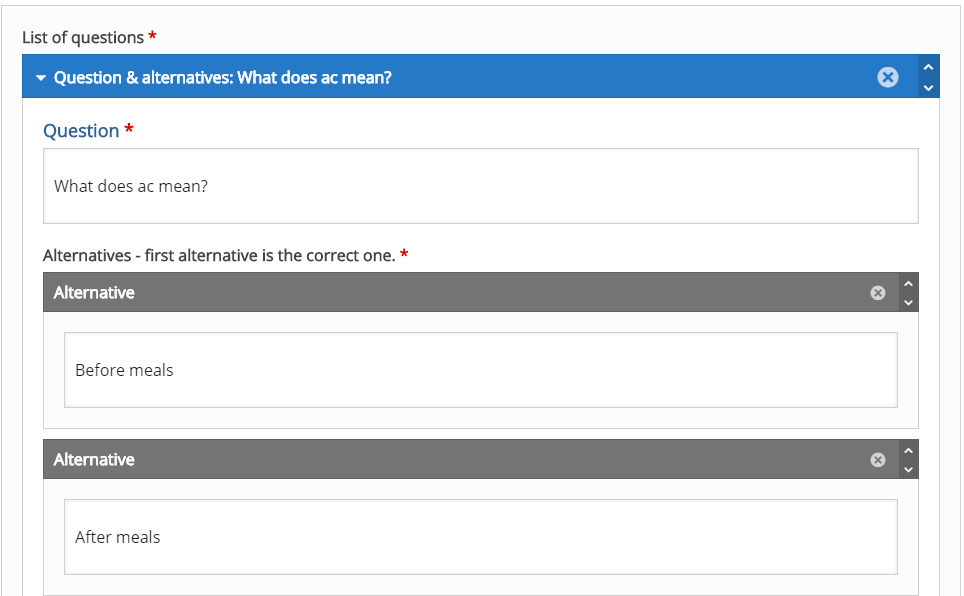

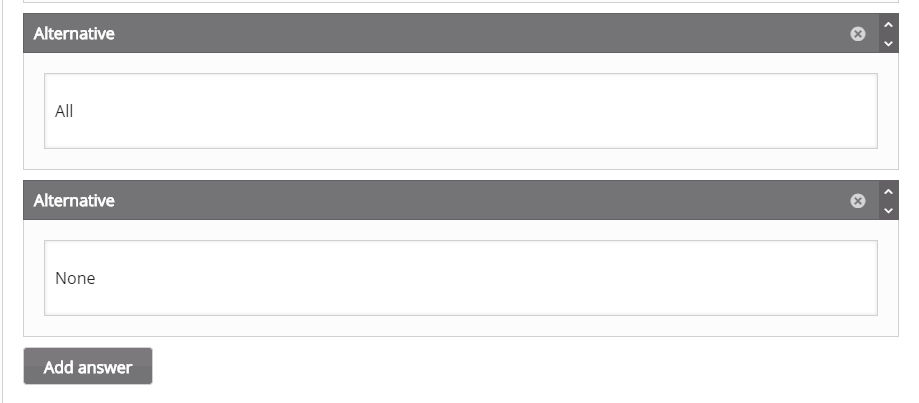

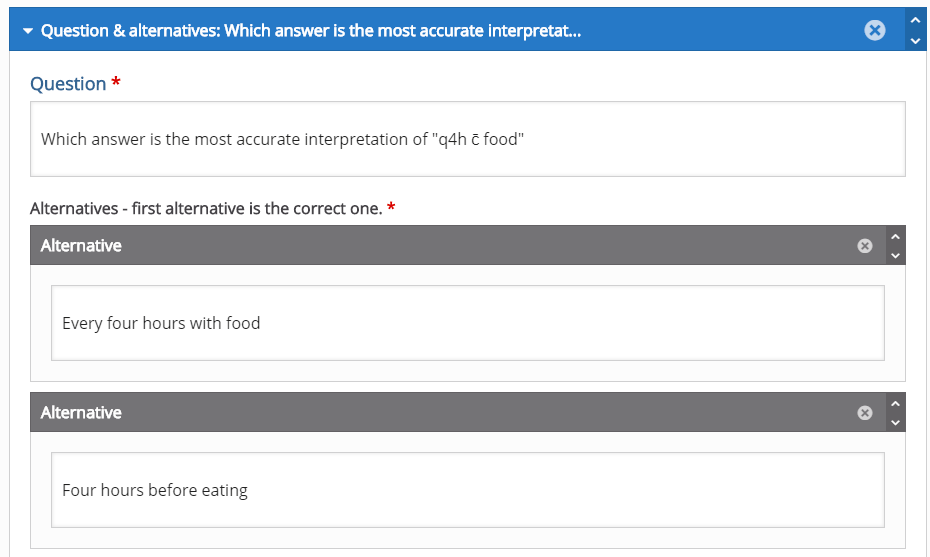

In these dialogue boxes, you will fill out the question and up to four possible answers. The form defaults to two possible answers, so you must click the grey “add answer” button to create each additional blank answer box. Note that the first answer box is for the answer that you want the quiz to grade as correct. The quiz will also randomize the order of all of the possible answers when it displays them. This will be important later.

Question 5 is an important example because I chose to include an “all of the above” style answer. Even though this answer is the last one on the form, it will not necessarily appear as the last answer within that answer set (e.g., it could appear as 1, 2, 3, all of the above, or as 1, all of the above, 3, 4, and so on). For this reason, you should choose a wording similar to “all answers are acceptable” and avoid answers that refer to other answers by their location within the answer set.
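To see why position-based wording breaks, here is a minimal Python sketch of the general idea. It is not H5P's actual code; the function name `shuffled_answers` and the sample answers are made up for illustration. It only assumes what is described above: the correct answer is entered first, and the answers are shuffled before they are shown.

```python
import random


def shuffled_answers(correct, others, seed=None):
    """Return the answers in a random display order.

    Hypothetical helper for illustration only; it mimics the idea of
    shuffling answers before display, not H5P's real implementation.
    """
    rng = random.Random(seed)
    answers = [correct] + list(others)  # form order: correct answer first
    rng.shuffle(answers)                # display order: randomized
    return answers


# The catch-all answer is entered last on the form...
others = ["Answer B", "Answer C", "All answers are acceptable"]

# ...but it can land in any position once the answers are shuffled.
for seed in range(5):
    print(shuffled_answers("Answer A", others, seed=seed))
```

Because the catch-all answer can land anywhere in the list, wording that does not depend on position (“all answers are acceptable”) still reads correctly however the answers are ordered.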

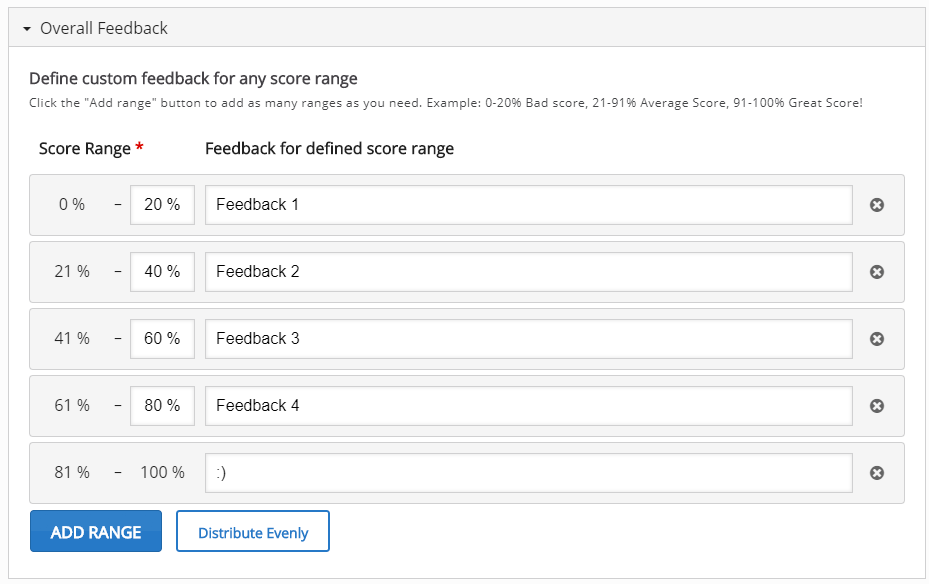

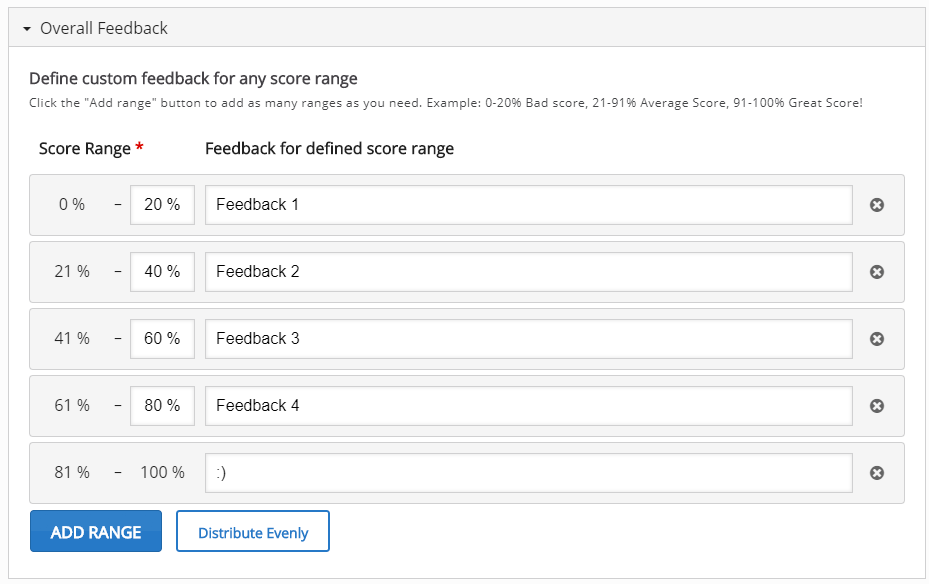

This image shows the grade ranges you can choose. This section starts relatively blank. To create the grade ranges for this quiz, I clicked the blue “add range” button until there was one grade range per question, then clicked the white “distribute evenly” button. If you prefer, you can adjust the grade ranges manually instead. The text box next to each grade range holds the message that will appear when a student's score falls within that range.
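If you do adjust the ranges by hand, it can help to work out an even split first. The Python sketch below shows one way to divide 0 to 100 percent into equal bands. The function name `even_ranges` is hypothetical, and the exact boundaries that H5P's “distribute evenly” button produces may differ slightly from these.

```python
def even_ranges(count):
    """Split 0-100 percent into `count` contiguous bands.

    Illustrative only: an assumed even split, not H5P's own calculation.
    """
    ranges = []
    start = 0
    for i in range(1, count + 1):
        end = (100 * i) // count      # upper bound of this band
        ranges.append((start, end))
        start = end + 1               # next band starts just above
    return ranges


# One band per question for a five-question quiz.
for low, high in even_ranges(5):
    print(f"{low}% - {high}%")
```

Each band would then get its own feedback message in the text box beside it.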

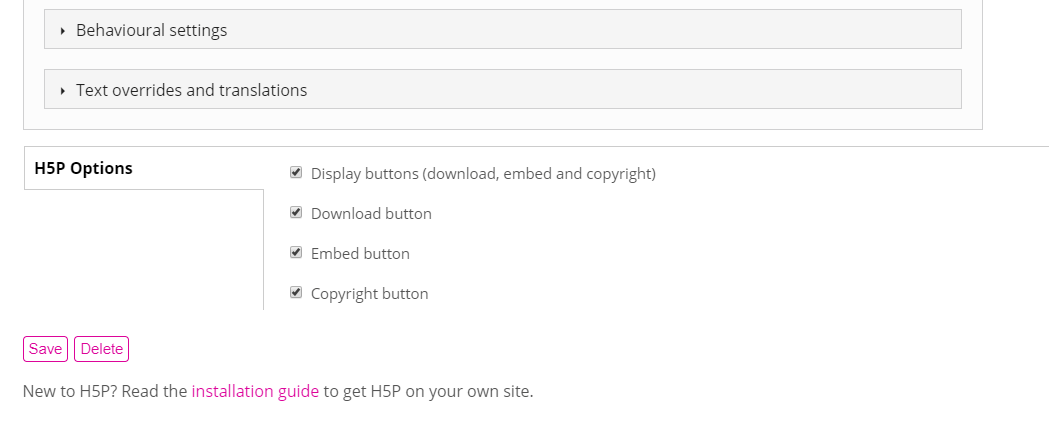

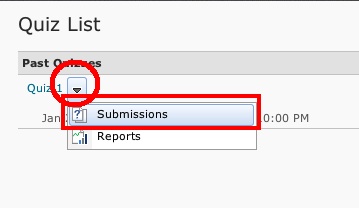

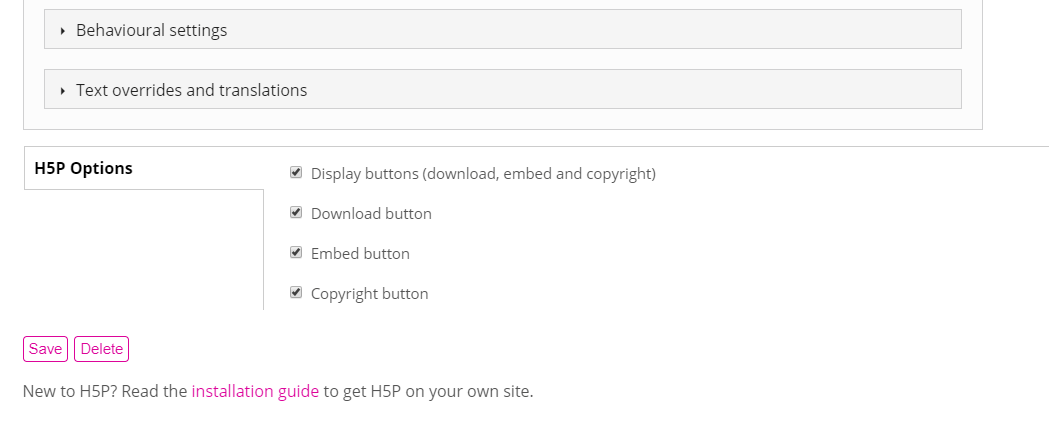

In these final steps, you can further customize the behavior of the quiz and the messages and prompts that appear on it. For this tutorial, these settings have been left at their defaults, but I encourage you to experiment with them. As with other content on H5P, you can edit the download, embed, and copyright buttons that will appear. Once you are happy with your quiz, click the pink save button. If you followed these directions, you should end up with a quiz identical to the one at the beginning of this blog post. Your completed quiz can then be embedded into Carmen or into your u.osu blog for use as a study tool. For help configuring your quiz, or for assistance in implementing an H5P quiz in your classes or study groups, please contact CON IT.